
MY PROJECTS


AI NPC for MMORPG Combat

An AI NPC learning model that uses recorded play to simulate the behavior of real human players in MMORPG combat

Desktop Game - Bear with Me

A physics puzzle game with music elements built in libGDX

Toon Shader

A shader with color quantization, strokes, outlines, and other toon shading effects

Human Body Model from HoloLens Sensor

A human body model constructed from HoloLens sensor data

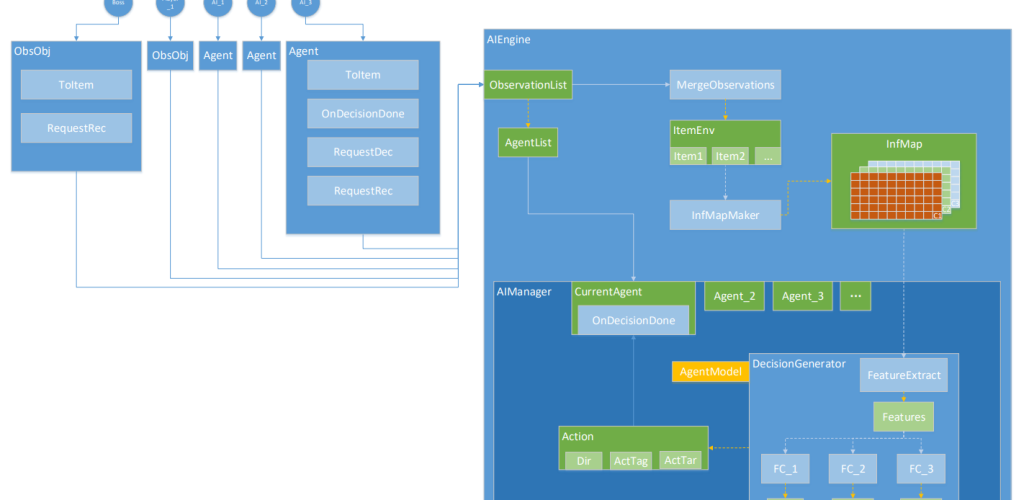

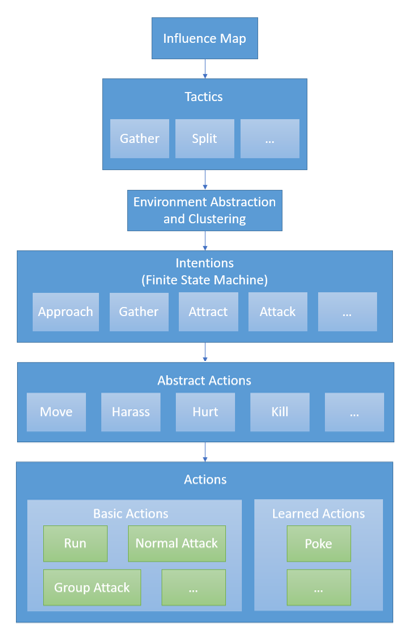

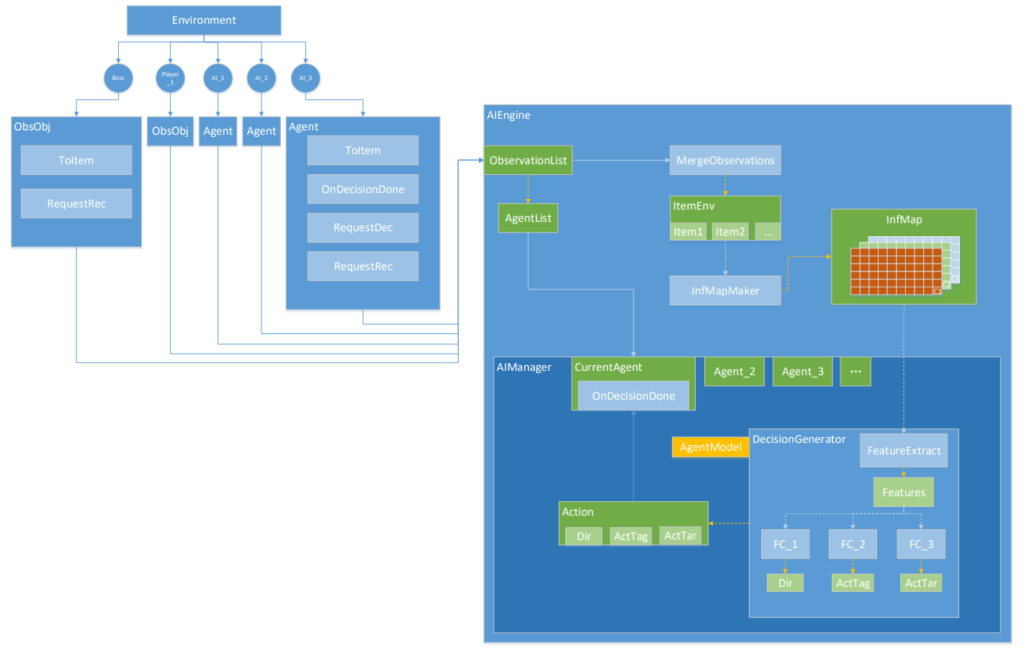

AI NPC for MMORPG Combat

Shengqu Games, 2020.6 – 2020.8

An AI NPC learning model that uses recorded play to simulate the behavior of real human players in MMORPG combat

* This model was developed together with Xingyu Ai from Shengqu Games.

1. Single AI NPC

– Influence Map

Split the map into nine blocks (3 by 3) and calculate the sparsity, difficulty, and diversity of each block. Then, find the human-labeled influence map with the highest similarity and apply its tactic (if the sample size is large enough, this phase can be replaced by deep learning). The influence map is recalculated, and a new tactic chosen, only when the previous block has been cleared.
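The matching step above amounts to a nearest-neighbor lookup over labeled influence maps. This is a minimal sketch, assuming each block is already summarized by its three features and that similarity is plain Euclidean distance; the actual feature formulas and similarity measure are not given here, and `map_distance` and `choose_tactic` are hypothetical names.

```python
import math

# Hypothetical layout: one (sparsity, difficulty, diversity) triple per block,
# nine blocks in row-major order for the 3 by 3 map.
Features = list[tuple[float, float, float]]


def map_distance(a: Features, b: Features) -> float:
    """Euclidean distance between two influence maps (assumed similarity measure)."""
    return math.sqrt(sum(
        (x - y) ** 2
        for block_a, block_b in zip(a, b)
        for x, y in zip(block_a, block_b)
    ))


def choose_tactic(current: Features, labeled: list[tuple[Features, str]]) -> str:
    """Return the tactic of the human-labeled map closest to `current`."""
    _, tactic = min(labeled, key=lambda item: map_distance(current, item[0]))
    return tactic


labeled_maps = [
    ([(0.2, 0.5, 0.1)] * 9, "gather"),
    ([(0.9, 0.1, 0.8)] * 9, "split"),
]
print(choose_tactic([(0.3, 0.4, 0.2)] * 9, labeled_maps))  # gather
```

With enough samples, this lookup is the part that could be swapped for a learned model, as noted above.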

– Tactics

Recorded samples are grouped by tactic. Once the influence map has determined a tactic, only the samples belonging to that tactic are used.

– Environment Abstraction and Clustering

Cluster the enemies into two groups according to their distance from, or engagement with, the player; when only one enemy is left, a single cluster is formed. At each decision step, if the sample record being executed has finished, or the current clustering differs enough from that record's clustering, a new closest sample record with an acceptable intention is found using a specified environment similarity formula.
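The clustering and re-selection rule can be sketched as follows. This is a minimal sketch under several assumptions: clustering uses distance to the player only (not engagement), an exact one-dimensional two-means split stands in for the unspecified clustering method, and the "difference" between clusterings is taken as the summed distance between matching centers with a hypothetical `threshold`. The similarity formula used to pick the next record is not reproduced.

```python
import math

Point = tuple[float, float]


def centroid(points: list[Point]) -> Point:
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


def sse(values: list[float]) -> float:
    """Sum of squared deviations from the mean."""
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values)


def cluster_enemies(player: Point, enemies: list[Point]) -> list[Point]:
    """Group enemies into at most two clusters by distance to the player.

    Returns cluster centers, nearest cluster first. With one enemy left,
    a single cluster is formed.
    """
    if len(enemies) <= 1:
        return [centroid(enemies)] if enemies else []
    ranked = sorted(enemies, key=lambda e: math.dist(player, e))
    dists = [math.dist(player, e) for e in ranked]
    # Exact 1D two-means: try every split point of the sorted distances.
    split = min(range(1, len(ranked)),
                key=lambda i: sse(dists[:i]) + sse(dists[i:]))
    return [centroid(ranked[:split]), centroid(ranked[split:])]


def clustering_difference(a: list[Point], b: list[Point]) -> float:
    """Summed distance between matching centers; infinite if cluster counts differ."""
    if len(a) != len(b):
        return math.inf
    return sum(math.dist(p, q) for p, q in zip(a, b))


def needs_new_record(record_finished: bool, current: list[Point],
                     record: list[Point], threshold: float) -> bool:
    """Decide whether to search for a new closest sample record."""
    return record_finished or clustering_difference(current, record) > threshold
```

Treating a change in cluster count as an infinite difference means that, for example, losing the second cluster always triggers a new search.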

– Intentions

Intentions are controlled by a finite state machine with specific transition conditions, so that consecutive movements of the AI player remain consistent.
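A finite state machine of this kind can be sketched as a table of allowed transitions. The intention names and transitions below are hypothetical placeholders, since the actual states and conditions are not documented; `is_acceptable` shows how a candidate sample record's intention could be filtered before it is chosen.

```python
# Hypothetical intentions and allowed transitions (not the original set).
TRANSITIONS: dict[str, set[str]] = {
    "approach": {"approach", "attack"},
    "attack": {"attack", "retreat", "approach"},
    "retreat": {"retreat", "approach"},
}


class IntentionFSM:
    def __init__(self, initial: str = "approach") -> None:
        self.state = initial

    def is_acceptable(self, intention: str) -> bool:
        """A record is acceptable only if its intention is reachable from the current state."""
        return intention in TRANSITIONS[self.state]

    def transition(self, intention: str) -> None:
        if not self.is_acceptable(intention):
            raise ValueError(f"cannot go from {self.state!r} to {intention!r}")
        self.state = intention
```

Here the AI cannot jump straight from "approach" to "retreat"; it must pass through "attack" first, which keeps adjacent movements consistent.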

– Abstract Actions

Abstract actions are used to determine whether the movement in the current sample record has finished.

– Actions

There are two kinds of actions: basic actions that are directly implemented in the game and learned actions that are combinations of basic actions. Each learned action has its own set of sample records consisting of basic actions.
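The two kinds of actions can be modeled as a small data structure. This is a minimal sketch; the class names and the example action names are hypothetical, and each learned action simply stores its sample records as sequences of basic actions.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class BasicAction:
    name: str  # implemented directly in the game, e.g. "move_forward" (hypothetical)


@dataclass
class LearnedAction:
    name: str
    # Each sample record is one observed sequence of basic actions.
    records: list[list[BasicAction]] = field(default_factory=list)

    def expand(self, record_index: int = 0) -> list[BasicAction]:
        """Return the basic actions of one sample record."""
        return list(self.records[record_index])


dash_attack = LearnedAction(
    "dash_attack",
    [[BasicAction("move_forward"), BasicAction("attack")]],
)
```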

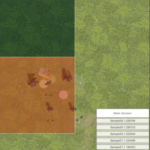

– Demo (Unity)

The 3 by 3 dark green blocks at the beginning show the influence map, with orange indicating how much influence each block has. The first tactic the AI model chooses is gather; after the first block is cleared, the influence map is recalculated and split is chosen as the next tactic. The pink circles indicate the current clustering centers, the green circles indicate the clustering centers of the picked sample record, the white circle indicates the target position, and the yellow bar indicates the target direction.

2. Multi-agent AI NPC

– Influence Map

The influence map is stored as a color image, with the red channel representing the level of enemy threat, the green channel representing the strength of players on the AI's side, and the blue channel representing the potential gain from heading toward that block.
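Packing the three quantities into one image can be sketched as below. This assumes each quantity has already been normalized to [0, 1] per block and is stored in 8-bit channels; the function name and the normalization are assumptions, not details from the original model.

```python
def to_byte(value: float) -> int:
    """Clamp to [0, 1] and scale to an 8-bit channel value."""
    return round(max(0.0, min(1.0, value)) * 255)


def encode_influence_map(threat: list[list[float]],
                         ally_power: list[list[float]],
                         gain: list[list[float]]) -> list[list[tuple[int, int, int]]]:
    """Combine three per-block grids into rows of (R, G, B) pixels."""
    return [
        [(to_byte(t), to_byte(a), to_byte(g))
         for t, a, g in zip(t_row, a_row, g_row)]
        for t_row, a_row, g_row in zip(threat, ally_power, gain)
    ]
```

Storing the map as an image makes it a natural input for the convolutional models mentioned under Future Work.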

– Action

Each action output has three attributes. The direction is a vector of length nine indicating where to move; for a skill action, all of its values are zero. The action tag is a vector of skill information, such as whether the skill is a single-target or group attack and whether it requires a cooldown. Finally, the action target gives the target position of the skill.
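The three-part action output can be sketched as a dataclass. This is a minimal sketch: the one-hot encoding of the nine movement directions and the two-element tag layout are assumptions, and `move_action` and `skill_action` are hypothetical helpers.

```python
from dataclasses import dataclass

NUM_DIRECTIONS = 9


@dataclass
class ActionOutput:
    direction: list[float]       # length nine; all zeros for a skill action
    tag: list[float]             # hypothetical layout: [is_group_attack, needs_cooldown]
    target: tuple[float, float]  # target position of the skill

    def __post_init__(self) -> None:
        if len(self.direction) != NUM_DIRECTIONS:
            raise ValueError("direction must have length nine")

    @property
    def is_skill(self) -> bool:
        # Valid under the assumption that movement outputs are one-hot.
        return not any(self.direction)


def move_action(direction_index: int) -> ActionOutput:
    """Hypothetical one-hot movement output; tag and target are unused."""
    direction = [0.0] * NUM_DIRECTIONS
    direction[direction_index] = 1.0
    return ActionOutput(direction, [0.0, 0.0], (0.0, 0.0))


def skill_action(is_group_attack: bool, needs_cooldown: bool,
                 target: tuple[float, float]) -> ActionOutput:
    return ActionOutput([0.0] * NUM_DIRECTIONS,
                        [float(is_group_attack), float(needs_cooldown)], target)
```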

– Future Work

Deep learning can be applied to learn actions from influence map images using sample records as training data.

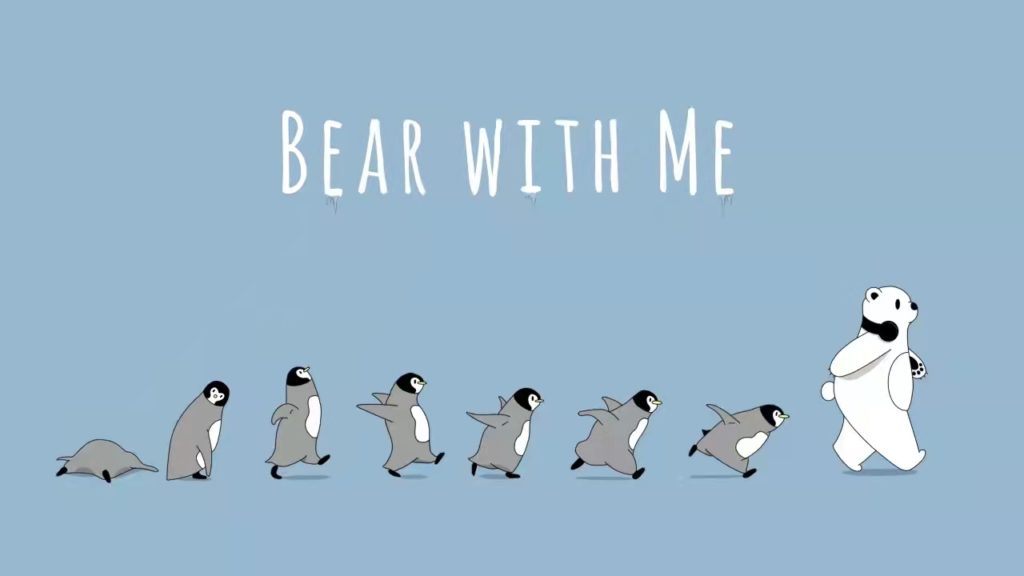

Desktop Game - Bear with Me

Cornell University, 2021.2 – 2021.5

A physics puzzle game with music elements built in libGDX

* This game was developed together with a group of students from Cornell University (credits below). I served as the team's software lead.

1. Game Concept

During the ice age, a polar bear embarks on a journey to visit its penguin friend, whose a cappella group members have been captured by humans. The polar bear decides to reunite the a cappella group and have them perform again. The player, acting as the polar bear, paves paths through the floating ice, explores six different continents, and rescues the jailed musical penguins by finding notes, which act as keys to their cells. The rescued penguins then act as helpers as the polar bear continues its journey to the South Pole. The main features are:

- Chuck penguins to knock down icicles and pave your way across the floating ice

- Glean the scattered notes to collect music stems and rescue jailed penguins

- Traverse the world to explore the scenic background

2. Design Philosophy

– Strategic Gameplay

The penguins are smaller and more agile than the polar bear, letting them reach places the polar bear cannot reach alone. On the other hand, the polar bear is much stronger than the penguins, so it can throw them to great heights and over long distances to collect notes. The player has to plan ahead how to combine the different strengths of the polar bear and the penguins so that they all reach the exit safely.

– Relaxing and Casual Experience

Through cute visual design, melodious music, and the friendly, cooperative bond between the polar bear and the penguins, the game provides a relaxing and casual experience. Its colorful vector art style, paired with smooth a cappella background music, creates a casual atmosphere for the player.

– Sense of Accomplishment

A unique music system is implemented in the game, in which the player is rewarded with a music stem on each continent. The level music starts off very barebones, with only ambience, backing strings, and a melody. Every time a continent is completed, a music stem with a new instrument is introduced. This continues until all the penguins are collected, at which point the song is fully complete, representing the polar bear's growth throughout the journey.

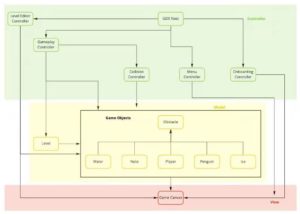

3. Code Architecture

– Dependency Diagram

This diagram shows the relationships between the modules we implemented. The boxes represent modules, and the edges represent how they collaborate.

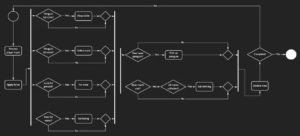

– Activity Diagram

This diagram outlines the flow of the application update loop over time.


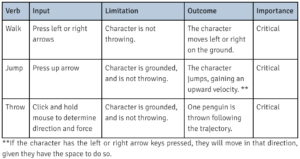

4. Player Actions

The player can walk left and right, jump, and throw penguins. To knock down icicles or reach notes that are out of range, the player can throw a penguin in a chosen direction with a chosen force, and a trajectory is shown to indicate the penguin's path.
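A trajectory preview like the one described can be sketched by sampling the projectile's closed-form path. This assumes the throw is a single impulse (initial velocity = force / mass along the normalized aim direction), constant gravity, and no drag or collisions; the parameter names and defaults are hypothetical. A step-based physics engine will produce a slightly different path than this exact parabola.

```python
import math


def throw_trajectory(start: tuple[float, float], direction: tuple[float, float],
                     force: float, mass: float = 1.0, gravity: float = -9.8,
                     dt: float = 1 / 60, steps: int = 30) -> list[tuple[float, float]]:
    """Sample `steps` points along the thrown penguin's path, one every `dt` seconds."""
    dx, dy = direction
    length = math.hypot(dx, dy)
    vx = force / mass * dx / length
    vy = force / mass * dy / length
    x0, y0 = start
    points = []
    for i in range(1, steps + 1):
        t = i * dt
        points.append((x0 + vx * t, y0 + vy * t + 0.5 * gravity * t * t))
    return points
```

The returned points could then be drawn as dots or a line segment strip while the player is aiming.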


6. Credits

Software Lead, Programmer

– Yiheng Dong –

Project Lead, Programmer

– Zili Zhou –

Design Lead

– Sirius Liu –

Audio Lead

– Lucas James Cusati –

Designer

– Xiaoyao Yao –

Programmer

– Junxi Song –

– Juliane Phoebe Tsai –

– Haomiao Liu –

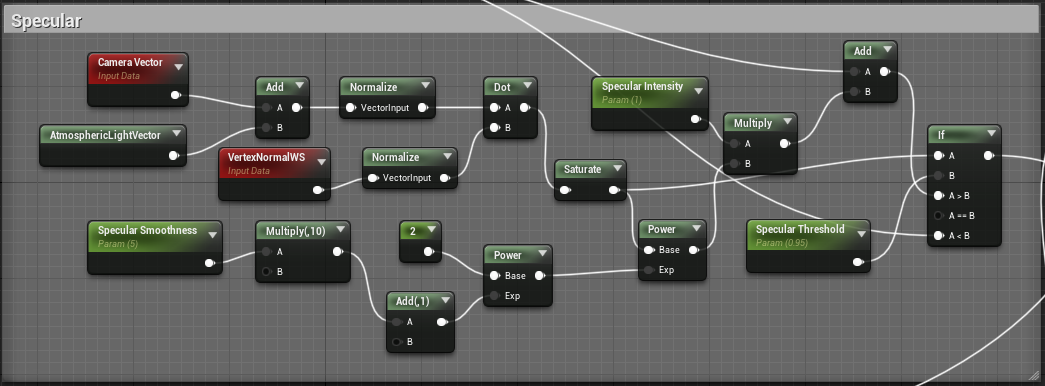

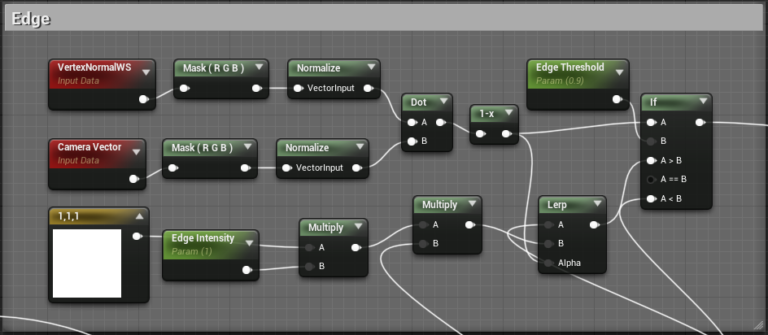

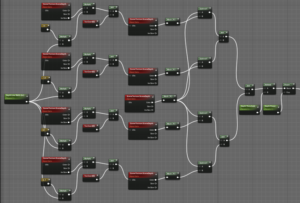

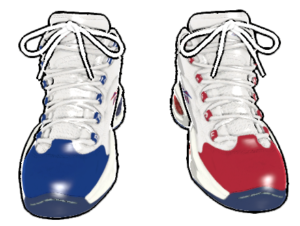

Toon Shader

Visual Concepts, 2021.6 – 2021.7

A shader with color quantization, strokes, outlines, and other toon shading effects

* The actual shader is implemented in HLSL for VC's own game engine, but for privacy reasons, only its logic is shown here, using UE4 shader graphs.

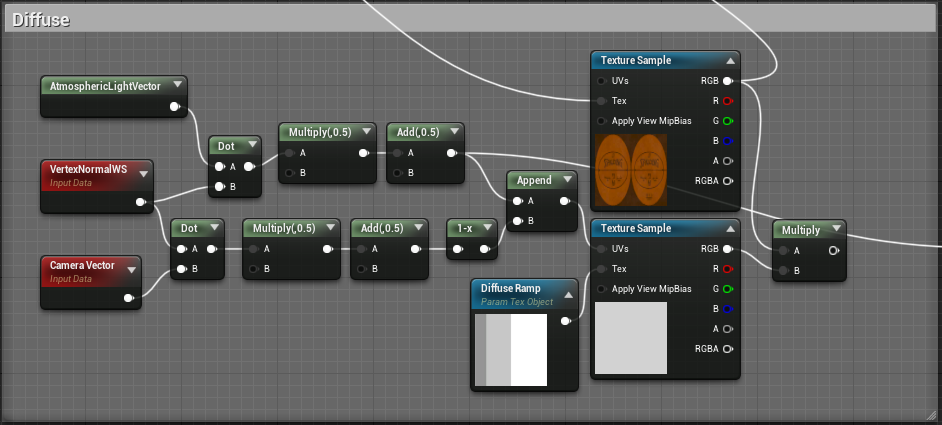

1. Diffuse (Color Quantization)

– Implementation

For a 2D diffuse ramp, use the dot product of the light vector and the normal vector as the horizontal coordinate and the dot product of the camera vector and the normal vector as the vertical coordinate to locate a pixel on the ramp. Then, multiply the original color by this pixel value.
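The ramp lookup can be sketched on the CPU for a single pixel. This assumes normalized vectors, a ramp stored as rows of RGB tuples, nearest-neighbor sampling, and a remap of each dot product from [-1, 1] to [0, 1] (half-Lambert style) before the lookup; the remap and the function names are assumptions rather than details of the HLSL version.

```python
Vec3 = tuple[float, float, float]
Color = tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def sample_ramp(ramp: list[list[Color]], u: float, v: float) -> Color:
    """Nearest-neighbor lookup; u picks the column, v picks the row (both in [0, 1])."""
    rows, cols = len(ramp), len(ramp[0])
    x = min(int(u * cols), cols - 1)
    y = min(int(v * rows), rows - 1)
    return ramp[y][x]


def toon_diffuse(base: Color, normal: Vec3, light: Vec3, view: Vec3,
                 ramp: list[list[Color]]) -> Color:
    """All vectors are assumed normalized."""
    # Assumption: remap each dot product from [-1, 1] to [0, 1] before the lookup.
    u = dot(normal, light) * 0.5 + 0.5
    v = dot(normal, view) * 0.5 + 0.5
    ramp_color = sample_ramp(ramp, u, v)
    return tuple(c * r for c, r in zip(base, ramp_color))
```

Because the ramp is sampled with nearest-neighbor lookup, the shading snaps to a few discrete colors, which is the color quantization effect.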

– Effect (UE4)

2. Stroke

– Implementation

Set a light intensity threshold for each stroke texture. Then, use the dot product of the light vector and the normal vector as the light intensity to sample a pixel from the corresponding stroke texture, and interpolate between neighboring strokes to smooth the boundaries.
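Choosing and blending stroke textures by threshold can be sketched as below. This assumes ascending thresholds (darkest hatching first), linear interpolation between the two textures whose thresholds bracket the intensity, and that the caller has already sampled each stroke texture at the pixel's UV; `stroke_weights` and `shade` are hypothetical names.

```python
def stroke_weights(intensity: float, thresholds: list[float]) -> list[tuple[int, float]]:
    """Return (texture index, weight) pairs for the stroke textures to blend."""
    if intensity <= thresholds[0]:
        return [(0, 1.0)]
    if intensity >= thresholds[-1]:
        return [(len(thresholds) - 1, 1.0)]
    for i in range(len(thresholds) - 1):
        lo, hi = thresholds[i], thresholds[i + 1]
        if lo <= intensity < hi:
            t = (intensity - lo) / (hi - lo)  # position between neighboring strokes
            return [(i, 1.0 - t), (i + 1, t)]
    raise ValueError("thresholds must be in ascending order")


def shade(intensity: float, thresholds: list[float], samples: list[float]) -> float:
    """samples[i] is the value sampled from stroke texture i at this pixel's UV."""
    return sum(w * samples[i] for i, w in stroke_weights(intensity, thresholds))
```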

– Effect (VC’s game engine)

5. Depth Outline with Anti-Aliasing

– Implementation

Set a depth line width (an integer) and a depth threshold. Then, for each pixel, sum up the depth differences between it and its neighboring pixels. If the sum exceeds the threshold, draw an outline at that pixel.
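The depth test can be sketched over a small depth buffer. This assumes "neighboring pixels" means the pixels up to `line_width` steps away in the four axis directions and that absolute depth differences are summed; both are interpretations, and the function name and defaults are hypothetical.

```python
def depth_outline(depth: list[list[float]], line_width: int = 1,
                  threshold: float = 0.1) -> list[list[bool]]:
    """Return a mask marking the pixels where an outline should be drawn."""
    h, w = len(depth), len(depth[0])
    mask = [[False] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total = 0.0
            for step in range(1, line_width + 1):
                for dy, dx in ((step, 0), (-step, 0), (0, step), (0, -step)):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < h and 0 <= nx < w:
                        total += abs(depth[y][x] - depth[ny][nx])
            mask[y][x] = total > threshold
    return mask
```

A larger `line_width` lets pixels farther from a depth discontinuity pass the test, which thickens the outline.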

For each outline pixel, the closer it is to the end of its edge, the stronger the blur. (Anti-aliasing implementation images from: http://blog.simonrodriguez.fr/articles/2016/07/implementing_fxaa.html)
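The "closer to the end, stronger blur" rule follows the FXAA edge-end step described in the linked article, where the blend offset is 0.5 minus the distance to the nearer edge end divided by the edge length. This is a simplified sketch: it assumes the distances to both edge ends have already been found by walking along the edge, and it omits FXAA's luma-based edge detection, the check that discards the offset when the luma variation at the nearer end is wrong, and the subpixel term.

```python
Color = tuple[float, float, float]


def edge_blend_offset(dist_to_end_1: float, dist_to_end_2: float) -> float:
    """Blend offset in pixels (0 to 0.5): near an edge end ~0.5, mid-edge ~0."""
    edge_length = dist_to_end_1 + dist_to_end_2
    return 0.5 - min(dist_to_end_1, dist_to_end_2) / edge_length


def blend_across_edge(color: Color, neighbor: Color, offset: float) -> Color:
    """Bilinear sampling at a sub-pixel offset toward the neighbor is a linear blend."""
    return tuple(c + (n - c) * offset for c, n in zip(color, neighbor))
```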

– Effect (VC’s game engine)

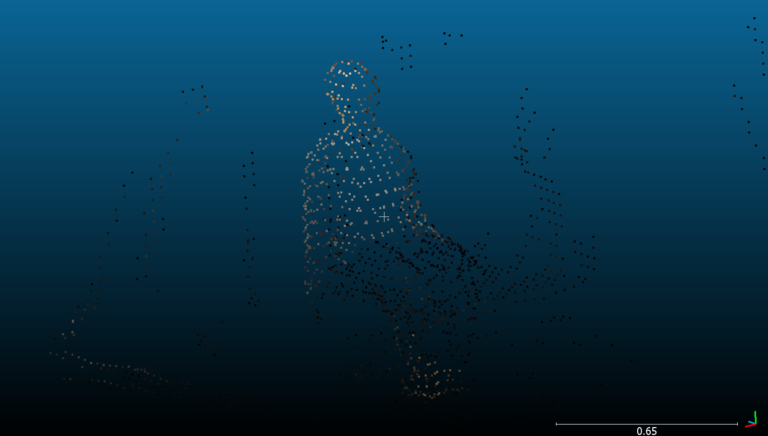

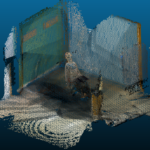

Human Body Model from HoloLens Sensor

Cornell University, 2021.9 – 2021.12

A human body model constructed from HoloLens sensor data

* This project was supervised by Professor Bharath Hariharan and Harald Haraldsson.

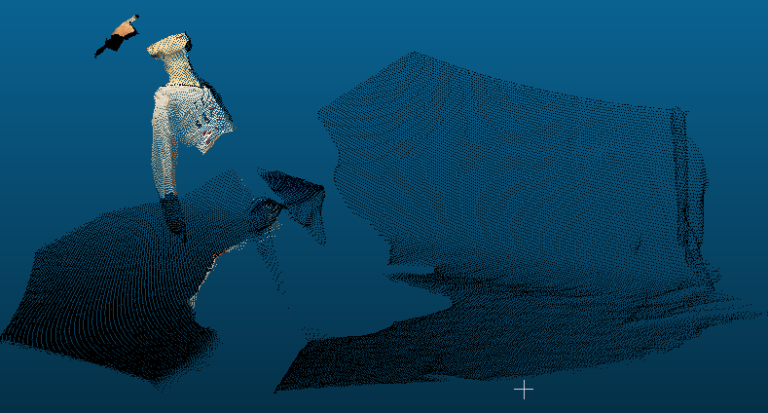

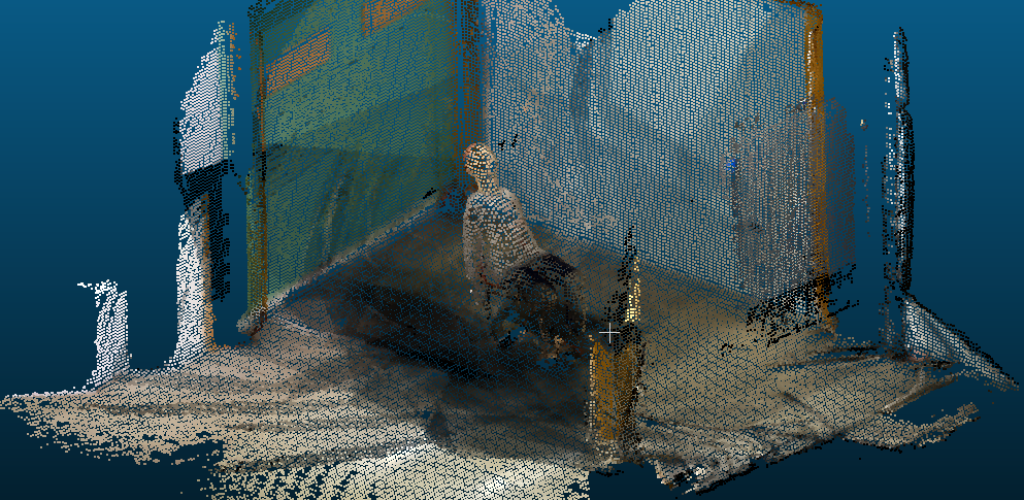

1. Partial Point Clouds

– Data Collection

Plenty of depth frames and RGB frames are collected by walking around the room and interacting with the human model.

– Image Segmentation

To separate the human-related points, Mask R-CNN, a convolutional neural network that produces bounding boxes and segmentation masks for each detected object, is run on all raw RGB images. The segmentation mask for the "person" label is selected, and only the "person" pixels are kept in color.
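Applying the selected masks to an image can be sketched as below. This assumes the network's per-detection masks have already been thresholded to booleans and paired with class names; the input format and the choice to paint non-person pixels black are assumptions, not details of the original pipeline.

```python
Pixel = tuple[int, int, int]


def keep_person_pixels(image: list[list[Pixel]], masks: list[list[list[bool]]],
                       labels: list[str], person_label: str = "person") -> list[list[Pixel]]:
    """Black out every pixel not covered by a mask labeled `person_label`."""
    h, w = len(image), len(image[0])
    person = [[False] * w for _ in range(h)]
    for mask, label in zip(masks, labels):
        if label == person_label:
            for y in range(h):
                for x in range(w):
                    person[y][x] = person[y][x] or mask[y][x]
    return [[image[y][x] if person[y][x] else (0, 0, 0) for x in range(w)]
            for y in range(h)]
```

Merging all "person" masks with a logical OR keeps every detected person, not just the highest-scoring one.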

– Space Reconstruction

After processing all raw RGB images, each depth image is paired with the RGB image that has the closest timestamp. Using the stored camera calibration parameters, part of the room can then be reconstructed as a point cloud.
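Timestamp pairing and reconstruction can be sketched with a binary search and a pinhole back-projection. This assumes sorted RGB timestamps, depth in meters, and simple pinhole intrinsics (`fx`, `fy`, `cx`, `cy`); the HoloLens depth sensor may need its own calibration mapping instead, and the camera-to-world transform is omitted.

```python
import bisect


def closest_rgb_index(rgb_times: list[float], depth_time: float) -> int:
    """Index of the RGB frame whose timestamp is closest to depth_time (rgb_times sorted)."""
    i = bisect.bisect_left(rgb_times, depth_time)
    if i == 0:
        return 0
    if i == len(rgb_times):
        return len(rgb_times) - 1
    return i if rgb_times[i] - depth_time < depth_time - rgb_times[i - 1] else i - 1


def backproject(depth: list[list[float]], fx: float, fy: float,
                cx: float, cy: float) -> list[tuple[float, float, float]]:
    """Pinhole back-projection of valid depth pixels into camera-space points."""
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z > 0:  # zero depth means no measurement
                points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points
```

The color for each point would come from the paired RGB frame, which is how the blacked-out non-person pixels become black points in the cloud.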

– Top: Original RGB Image

– Middle: Segmented RGB Image

– Bottom: Partial Point Cloud

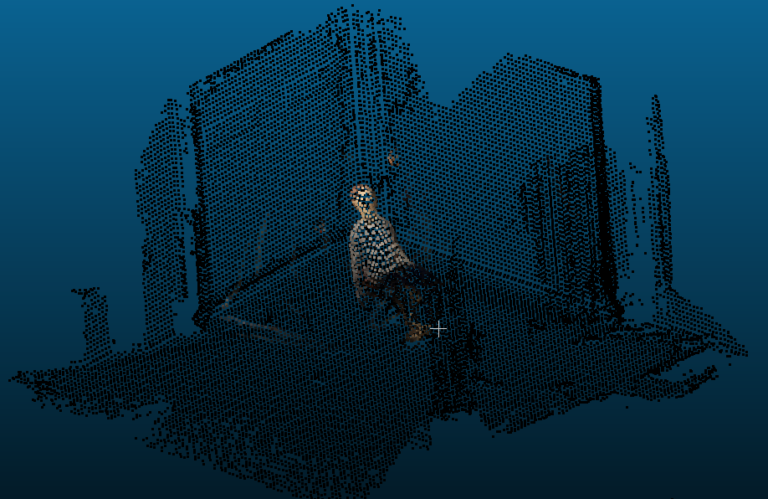

2. Detailed Volume Integration

– TSDF Integration

Using the TSDF volume integration algorithm, a point cloud model of the whole room is generated. The density and cleanliness of the model vary with the voxel size: the smaller the voxel size, the denser the point cloud, but the more noise it contains. To limit the noise, a voxel size of 0.04 is chosen.
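At the core of TSDF integration is a per-voxel weighted running average of truncated signed distances; the voxel size sets the spacing of the grid on which this update runs. This is a minimal single-voxel sketch of the standard update, assuming a constant weight per observation and a hypothetical truncation distance `trunc`; libraries such as Open3D implement the full volumetric version.

```python
def integrate_voxel(tsdf: float, weight: float, signed_distance: float,
                    trunc: float, obs_weight: float = 1.0) -> tuple[float, float]:
    """Fuse one depth observation into a voxel.

    signed_distance: distance from the voxel to the observed surface along the
    camera ray, positive in front of the surface.
    """
    if signed_distance < -trunc:
        return tsdf, weight  # far behind the surface: occluded, no update
    d = min(1.0, signed_distance / trunc)  # truncate to [-1, 1]
    new_weight = weight + obs_weight
    new_tsdf = (tsdf * weight + d * obs_weight) / new_weight
    return new_tsdf, new_weight
```

Averaging many observations suppresses per-frame depth noise, and the surface is recovered where the fused value crosses zero.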

– Refining

Since this model is intended for medical use, fine details such as the eyes are necessary. However, the TSDF-integrated model with black points filtered out is far too sparse. Therefore, the partial point clouds are looped over, and the distances between their points and the points in the integrated point cloud are calculated. If a point's minimum distance is under 0.04, it is added to the integrated model to complement the details.
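The refinement step can be sketched with a brute-force nearest-neighbor search. This assumes points are (x, y, z) tuples, distances are measured against the original integrated cloud (not the growing one), and the integrated cloud is non-empty; for real point clouds, a k-d tree such as SciPy's `cKDTree` would replace the inner `min` loop.

```python
import math

Point3 = tuple[float, float, float]


def refine(integrated: list[Point3], partial_clouds: list[list[Point3]],
           max_dist: float = 0.04) -> list[Point3]:
    """Add partial-cloud points lying within max_dist of the integrated model."""
    refined = list(integrated)
    for cloud in partial_clouds:
        for p in cloud:
            nearest = min(math.dist(p, q) for q in integrated)
            if nearest < max_dist:
                refined.append(p)
    return refined
```

Only points close to the existing surface are added, so the model gains density and detail without reintroducing far-away noise.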

– Top: TSDF Integration

– Middle: Filtered Point Cloud

– Bottom: Final Detailed Model

Contact

Please contact me using the following information:

- dongyiheng@hotmail.com

- +1 (607) 262-4343 (United States)

- +86 18916723701 (China)